Top Machine Learning Algorithms for Real-World Machine Learning

Linear Regression

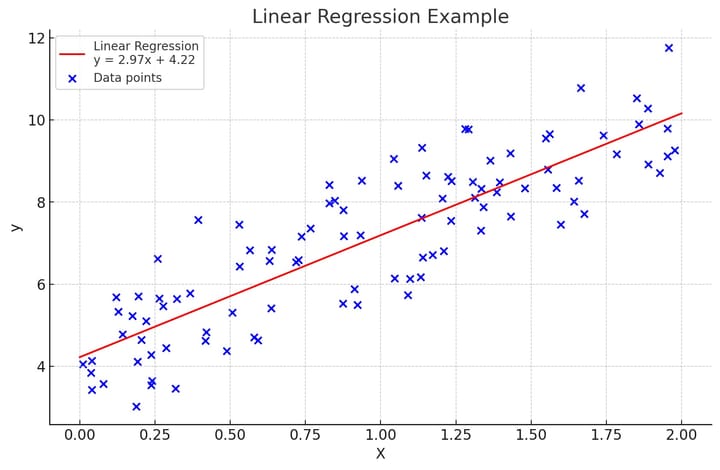

Linear regression is one of the most fundamental and widely used techniques in statistics and machine learning. At its core, it fits a straight line through a set of data points so that the line can be used to predict values for new data points.
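To make the idea of fitting a line concrete, here is a minimal sketch in plain Python. It assumes a single input variable and uses ordinary least squares, one common way to choose the line. The function names `fit_line` and `predict` and the sample data are made up for illustration and do not come from any particular library.

```python
def fit_line(xs, ys):
    """Return (slope, intercept) of the least-squares line through the points.

    Assumes xs and ys have the same length and xs are not all identical.
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    # Slope = covariance of x and y divided by variance of x.
    covariance = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    variance = sum((x - x_mean) ** 2 for x in xs)
    slope = covariance / variance
    # The least-squares line always passes through the point of means.
    intercept = y_mean - slope * x_mean
    return slope, intercept


def predict(x_new, slope, intercept):
    """Predict y for a new x using the fitted line."""
    return slope * x_new + intercept


# Illustrative data only: these points lie exactly on y = 2x + 1.
x_obs = [1.0, 2.0, 3.0, 4.0, 5.0]
y_obs = [3.0, 5.0, 7.0, 9.0, 11.0]

slope, intercept = fit_line(x_obs, y_obs)
y_pred = predict(6.0, slope, intercept)
```

Real data rarely falls exactly on a line, so in practice the fitted line is the one that minimizes the total squared vertical distance to the points rather than one that passes through all of them.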

The basic idea behind linear regression is